1 month and $4,016.42 later

Claude Code after 1 month and $4,016.42

I ran up $4,016.42 worth of API usage on Claude Code in one month. Thanks to the Max plan, I only paid $200.

After 1 month with Claude Code, here's what I learned.

What Claude Code Actually Is

Claude Code is Anthropic's command-line tool for AI-powered coding. It's essentially a thin wrapper around the Claude model that gives you close-to-direct access to it. There's no required IDE and no fancy UI: just a terminal interface that reads files, writes code, and executes commands.

This minimal approach feels surprisingly freeing. You're talking directly to the model without layers of abstraction, and in my experience it performs better than more integrated tools. The model can explore your whole codebase on its own and act directly on what it finds.

Check out the official documentation for setup details, and the recent SDK announcement if you want to build on top of it.

The Numbers

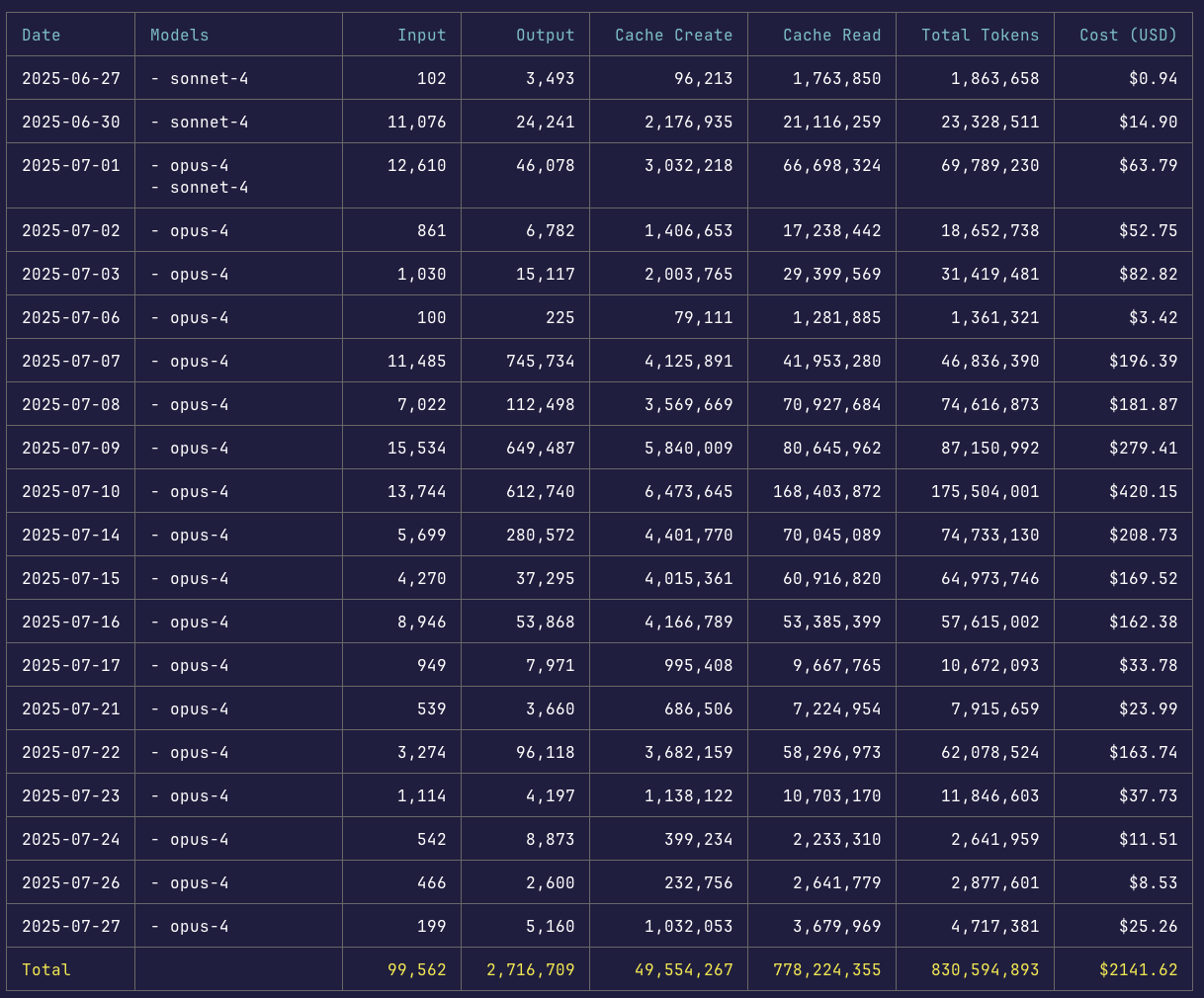

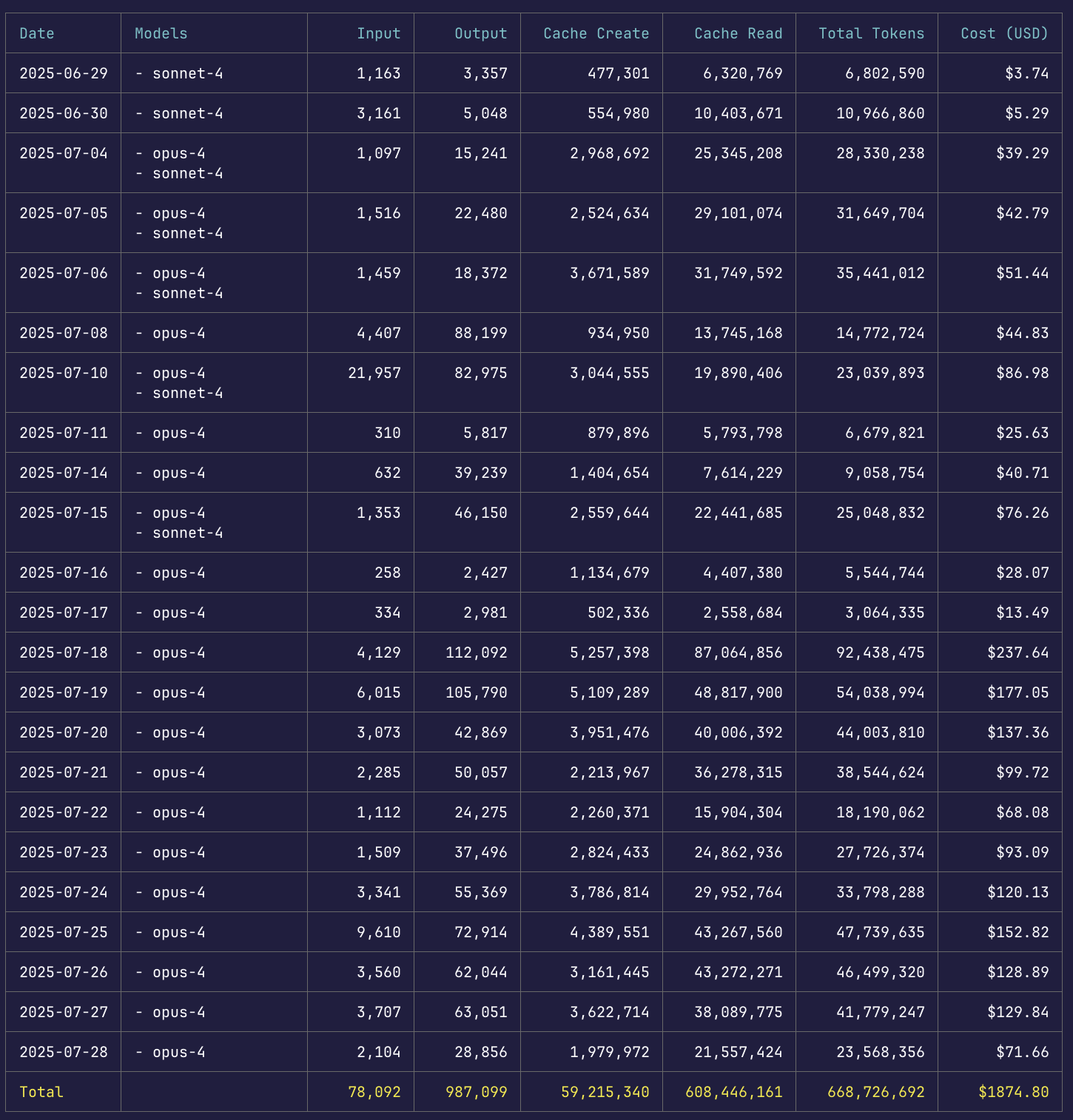

- Total API usage: $4,016.42

- What I paid: $200/month (Max plan with 20x usage)

The Max plan completely changes the economics: $4,016.42 worth of API usage cost me $200, about 5% of the pay-as-you-go price.
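For the curious, here is the arithmetic behind that claim, using only the two figures above:

```python
# The two figures from this section (USD).
api_equivalent = 4016.42  # what a month of usage would have cost at API rates
paid = 200.00             # one month of the Max plan

savings = api_equivalent - paid
savings_pct = savings / api_equivalent * 100
multiple = api_equivalent / paid

print(f"Saved ${savings:,.2f} ({savings_pct:.1f}%); usage worth {multiple:.1f}x the price")
# Saved $3,816.42 (95.0%); usage worth 20.1x the price
```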

Core Strengths

Context Awareness at Scale

Claude Code builds an understanding of your codebase's patterns and conventions, but not by ingesting every file. Instead, it searches the way a developer would: it runs grep-style searches to find relevant files and patterns, often spinning up temporary subagents through Task tool calls to do the searching. Each subagent finds what's needed and passes that information back to the main agent. This agent-to-agent handoff keeps the main context clean while still gathering broad knowledge of the codebase.

When I asked it to add error handling to an API endpoint, it found my existing error handler utility, matched our error message format, used appropriate status codes based on similar endpoints, and updated the API documentation.
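A toy model of that search-and-summarize handoff shows why it keeps the main context small: the subagent sees every match, but only a short summary crosses back. This is a sketch of the idea, not Claude Code's actual implementation; `search_subagent` and `main_agent` are hypothetical names, and a plain regex walk over the directory stands in for grep:

```python
import os
import re
from dataclasses import dataclass, field


@dataclass
class SearchResult:
    """Compact summary a search subagent hands back to the main agent."""
    pattern: str
    files: list[str] = field(default_factory=list)
    sample_lines: list[str] = field(default_factory=list)


def search_subagent(root: str, pattern: str, max_samples: int = 3) -> SearchResult:
    """Hypothetical subagent: scan the tree, return only a short summary.

    The full list of matching lines stays local to this function, the way a
    subagent's working context is discarded once it reports back.
    """
    regex = re.compile(pattern)
    result = SearchResult(pattern=pattern)
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in sorted(filenames):
            path = os.path.join(dirpath, name)
            try:
                with open(path, encoding="utf-8") as f:
                    lines = f.readlines()
            except (UnicodeDecodeError, OSError):
                continue  # skip binary or unreadable files
            hits = [line.strip() for line in lines if regex.search(line)]
            if hits:
                result.files.append(os.path.relpath(path, root))
                room = max_samples - len(result.sample_lines)
                result.sample_lines.extend(hits[:room])
    return result


def main_agent(root: str, patterns: list[str]) -> list[str]:
    """The main agent keeps only one summary line per search in its context."""
    context = []
    for pattern in patterns:
        summary = search_subagent(root, pattern)
        context.append(f"{pattern}: {len(summary.files)} file(s) {summary.files}")
    return context
```

However many lines match, the main agent's context grows by one line per search.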

The CLAUDE.md File

CLAUDE.md files are the key to consistency. Run /init to generate a starter file that documents your codebase, then customize it. Place it in your project root, and Claude references it in every interaction. For example:

# Key Commands

- npm run dev - Start development server

- npm run check - Typecheck + lint (run before commits)

- npm run test:single - Test single file (faster than full suite)

# Architecture Notes

- We use server components by default

- Client components only when needed for interactivity

- All API routes follow /api/v1/[resource] pattern

- Database queries use Drizzle, not raw SQL

# Style Guide

- No semicolons (Prettier handles this)

- Prefer early returns over nested ifs

- Comment WHY, not WHAT

This ensures Claude writes code matching your conventions from the start.
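A stale CLAUDE.md is worse than none, because Claude will confidently run commands that no longer exist. One way to keep the Key Commands section honest is to check it against package.json. A minimal sketch, assuming commands are written exactly as `- npm run <script> - <description>` like the example above; `check_claude_md` is a hypothetical helper, not part of Claude Code:

```python
import json
import re

# Matches lines like "- npm run test:single - Test single file"
COMMAND_LINE = re.compile(r"^-\s+npm run\s+(\S+)\s+-\s+(.+)$")


def documented_scripts(claude_md: str) -> dict[str, str]:
    """Map each `npm run` script named in CLAUDE.md to its description."""
    scripts = {}
    for line in claude_md.splitlines():
        match = COMMAND_LINE.match(line.strip())
        if match:
            scripts[match.group(1)] = match.group(2)
    return scripts


def check_claude_md(claude_md: str, package_json: str) -> list[str]:
    """Return scripts documented in CLAUDE.md but missing from package.json."""
    defined = json.loads(package_json).get("scripts", {})
    return [name for name in documented_scripts(claude_md) if name not in defined]
```

Run it with the contents of both files, e.g. `check_claude_md(open("CLAUDE.md").read(), open("package.json").read())`, and fail CI if the list is non-empty.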

Complex Refactoring

Example: migrating authentication from sessions to JWTs with backward compatibility. Traditional timeline: three days. Claude Code: four hours.

Me: Migrate our auth from sessions to JWT. Keep backward compatibility for 30 days.

Claude: *reads 47 files*

*creates migration plan*

*identifies risk areas*

*waits for approval*

Me: Proceed with the implementation.

Claude: *updates auth middleware*

*modifies 23 API endpoints*

*implements compatibility layer*

*updates test suite*

*runs all tests*

*fixes discovered issues*

Complete. All tests passing.

The Three-Agent Maximum

After extensive testing with parallel agents, I strongly recommend running no more than three agents at once.

Here's why: when multiple agents pass information to each other, context gets lost, leading to misaligned outputs and conflicting decisions. I learned this the hard way when I deployed 7 subagents to write test cases for a legacy project. Half the tests followed one format; the other half used a completely different approach.

The core issue is implicit decisions. When agents work in parallel, they make countless small choices - coding style, variable naming, architectural patterns - that clash when combined. Claude Code does try to mediate these conflicts, but you need extremely specific instructions in your plan to make it work.

Insights from Context Engineering

Building on Walden Yan's research on context engineering, here are the key principles I've found:

The telephone game kills agent systems. Every handoff between agents loses crucial context. What starts as "implement user authentication with OAuth" becomes increasingly distorted as it passes through multiple agents.

Context is king, not prompts. Models are already smart enough. The limitation isn't clever prompting but how much context they have about your task and previous actions. A mediocre prompt with complete context beats a perfect prompt with partial context.

We're in the HTML era of agent design. Current frameworks are premature - we're still discovering fundamental principles of reliable agent systems, similar to web development before React. Expect today's patterns to look primitive in hindsight.

Good systems feel like one consciousness. Even with complex underlying components, a well-engineered agent system should present itself as a single, coherent entity with continuous decision-making.

Context compression is necessary but dangerous. As tasks grow, you'll hit token limits and need to compress context. This introduces failure points when important details get lost. Plan for this from the start.
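One way to plan for compression is to mark the details that must survive (the original task, key decisions) and only ever drop the rest, leaving a marker so the agent knows something was removed. A minimal sketch; token counts are approximated by whitespace-separated words (a real system would use the model's tokenizer), and dropping old messages stands in for proper summarization:

```python
from dataclasses import dataclass

MARKER_TOKENS = 4  # "[N earlier messages compressed]" is four words


@dataclass
class Message:
    text: str
    pinned: bool = False  # pinned details are never compressed away


def approx_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer.
    return len(text.split())


def compress(history: list[Message], budget: int) -> list[Message]:
    """Drop the oldest unpinned messages until the history (plus a marker) fits."""
    kept = list(history)
    dropped = 0
    used = sum(approx_tokens(m.text) for m in kept)
    # Once anything is dropped, the marker message must fit in the budget too.
    while used + (MARKER_TOKENS if dropped else 0) > budget:
        victim = next((i for i, m in enumerate(kept) if not m.pinned), None)
        if victim is None:
            break  # only pinned messages left: still over budget, nothing safe to drop
        used -= approx_tokens(kept.pop(victim).text)
        dropped += 1
    if dropped:
        kept.insert(0, Message(f"[{dropped} earlier messages compressed]", pinned=True))
    return kept
```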

Universal tools beat specialized ones. Give your agents powerful general-purpose tools like shell access rather than narrow integrations. A bash command with an API key can do almost anything.

Linear workflows consistently outperform parallel ones. A single agent maintaining full awareness of previous decisions produces better results than multiple agents with partial context. If you must use parallel agents, limit them to read-only tasks like documentation searches or approach analysis.

For those experimenting with parallel workflows, I'd suggest trying the conductor pattern: one agent creates a plan (o3 excels at this; it creates logical, rigid plans), a second agent reviews the plan for correctness and overengineering, and then the first agent implements the approved plan.
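The conductor pattern is a short, linear loop: plan, review, revise until approved, then implement with the agent that wrote the plan. A minimal sketch with the agents passed in as plain functions; the function names, the `Review` shape, and the round limit are assumptions for illustration, not any framework's API:

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Review:
    approved: bool
    feedback: str = ""


def conduct(
    task: str,
    plan: Callable[[str, str], str],        # planner: (task, feedback) -> plan
    review: Callable[[str, str], Review],   # reviewer: (task, plan) -> verdict
    implement: Callable[[str, str], str],   # the planner agent again: (task, plan) -> result
    max_rounds: int = 3,
) -> Optional[str]:
    """Plan, review, and revise until approved; then implement the approved plan."""
    feedback = ""
    for _ in range(max_rounds):
        draft = plan(task, feedback)
        verdict = review(task, draft)
        if verdict.approved:
            return implement(task, draft)
        feedback = verdict.feedback  # fed into the next planning round
    return None  # no approved plan: hand back to a human instead of guessing
```

Each step sees the full output of the previous one, so nothing is lost to a telephone game between parallel agents.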

Practical Workflows

1. Environment Setup

Create a comprehensive CLAUDE.md file. The /init command generates a starter, but customize it for your needs.

2. Explore-Plan-Code-Commit

You: Analyze the user authentication system and document how login currently works.

Claude: *explores relevant files and documents findings*

You: Based on that analysis, create a plan to add OAuth without disrupting existing users.

Claude: *develops implementation strategy*

You: Execute the plan, but hold off on committing.

Claude: *implements changes and runs tests*

You: If everything passes, create a descriptive commit.
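The last two steps amount to a gate: nothing is committed unless the checks pass. If you'd rather enforce that in a script than trust the conversation, a small wrapper does it. A sketch; `commit_if_green` is a hypothetical helper, the test command is whatever your project uses (such as the `npm run check` script from the CLAUDE.md example), and `dry_run` defaults to on so it never commits by accident:

```python
import subprocess


def commit_if_green(test_cmd: list[str], message: str, dry_run: bool = True) -> bool:
    """Run the checks; stage and commit only when they pass."""
    result = subprocess.run(test_cmd, capture_output=True, text=True)
    if result.returncode != 0:
        # Show the tail of the failure output and stop before touching git.
        print(result.stdout[-2000:] + result.stderr[-2000:])
        return False
    if not dry_run:
        subprocess.run(["git", "add", "-A"], check=True)
        subprocess.run(["git", "commit", "-m", message], check=True)
    return True
```

For example, `commit_if_green(["npm", "run", "check"], "Add OAuth login alongside sessions", dry_run=False)`. Note that `git add -A` stages everything in the working tree, so start from a clean checkout.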

3. Be Specific

Vague: "Add user management"

Better: "Create user management following PostList.tsx pattern. Include pagination, search, role filtering, and bulk actions. Use our standard data table component."

4. Reset Context Often

Run /clear between distinct tasks. Claude's context window fills up, and as irrelevant information accumulates, performance degrades.

Limitations

Usage Management

Even the Max plan's 20x usage limit can run out, so plan your sessions. Batch related tasks instead of making isolated requests: five UI tweaks become one comprehensive session.
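Batching is mostly a habit, but if you keep a running list of small tweaks, folding them into one prompt per area is easy to automate. A minimal sketch; `batch_requests` and the prompt wording are hypothetical:

```python
def batch_requests(requests: list[tuple[str, str]]) -> list[str]:
    """Turn (area, change) pairs into one prompt per area, preserving order."""
    grouped: dict[str, list[str]] = {}
    for area, change in requests:
        grouped.setdefault(area, []).append(change)
    prompts = []
    for area, changes in grouped.items():
        bullets = "\n".join(f"- {change}" for change in changes)
        prompts.append(f"In {area}, make these changes in one session:\n{bullets}")
    return prompts
```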

Hallucinations

Claude sometimes invents modules. Last month it imported @/lib/quantum-cache, a module that doesn't exist, complete with detailed explanations of its "advanced caching strategies." Always verify imports.
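Verifying imports can be partly mechanical. A minimal sketch that flags `@/` imports which don't resolve to a file; it assumes `@/` maps to a single directory (commonly `src/` via tsconfig `paths`, but check your config) and only tries the extensions listed. `missing_alias_imports` is a hypothetical helper:

```python
import os
import re

# Matches `from "@/..."` and side-effect `import "@/..."` specifiers.
ALIAS_IMPORT = re.compile(r"""(?:from|import)\s+['"]@/([^'"]+)['"]""")
CANDIDATE_SUFFIXES = ["", ".ts", ".tsx", ".js", ".jsx", "/index.ts", "/index.tsx"]


def missing_alias_imports(source: str, alias_root: str) -> list[str]:
    """Return @/ imports in `source` that don't resolve to a file under alias_root."""
    missing = []
    for spec in ALIAS_IMPORT.findall(source):
        base = os.path.join(alias_root, spec)
        if not any(os.path.isfile(base + suffix) for suffix in CANDIDATE_SUFFIXES):
            missing.append("@/" + spec)
    return missing
```

In a TypeScript project, a typecheck (the `npm run check` script above) will also report unresolved modules; a script like this is just a quick, targeted check on a single generated file.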

Scope Creep

A simple bug fix might become a full refactor. Use explicit constraints: "Fix ONLY the error handling in lines 42-47. Make no other changes."
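You can also check that constraint after the fact: diff the file before and after, and list any changed lines outside the allowed range. A minimal sketch using Python's `difflib`; the range is inclusive and 1-indexed in the original file's numbering, and `lines_outside_scope` is a hypothetical helper:

```python
import difflib


def lines_outside_scope(before: str, after: str, first: int, last: int) -> list[int]:
    """Return original (1-indexed) line numbers changed outside [first, last].

    A pure insertion is reported as the original line it follows (0 = top of file).
    """
    old, new = before.splitlines(), after.splitlines()
    matcher = difflib.SequenceMatcher(a=old, b=new, autojunk=False)
    touched = set()
    for tag, i1, i2, _j1, _j2 in matcher.get_opcodes():
        if tag == "equal":
            continue
        if tag == "insert":
            touched.add(i1)  # inserted between original lines i1 and i1 + 1
        else:  # "replace" or "delete" covers original lines i1 + 1 .. i2
            touched.update(range(i1 + 1, i2 + 1))
    return sorted(n for n in touched if not first <= n <= last)
```

An empty result for `(42, 47)` means the fix stayed within lines 42-47.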

The SDK

The Claude Code SDK enables custom integrations:

- Git hooks for automated code review

- PR descriptions from actual diffs

- Business logic-aware linting

- Test generation from implementations
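The PR-description idea is the easiest to sketch. Rather than guessing at the SDK's programmatic API, this uses the CLI's non-interactive print mode (`claude -p`), which accepts piped input. It assumes `claude` is on your PATH and authenticated, and that your base branch is `main`; the helper names and prompt are hypothetical:

```python
import subprocess

PROMPT = (
    "Write a pull request description for the diff on stdin: a one-line summary, "
    "then a bullet per notable change. Describe only what is in the diff."
)


def branch_diff(base: str = "main") -> str:
    """Diff of the current branch against `base` (assumed default branch name)."""
    return subprocess.run(
        ["git", "diff", f"{base}...HEAD"], capture_output=True, text=True, check=True
    ).stdout


def pr_description(diff: str) -> str:
    """Pipe the diff to `claude -p` (non-interactive print mode) and return its reply."""
    if not diff.strip():
        return ""  # empty diff: nothing to describe, so skip the call entirely
    result = subprocess.run(
        ["claude", "-p", PROMPT], input=diff, capture_output=True, text=True, check=True
    )
    return result.stdout.strip()
```

Usage: `print(pr_description(branch_diff()))`. Because the prompt is built from the actual diff, the description can't drift from what changed.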

Should You Use It?

Yes if you:

- Ship features independently

- Prioritize velocity

- Have good test coverage

- Can adapt workflows

No if you:

- Work on safety-critical systems

- Have tight budgets

- Need absolute control

- Lack adaptation time

The Official Guide

Anthropic recently published "Claude Code: Best practices for agentic coding," which goes deep on optimization techniques. A few highlights I've found particularly useful:

The # key shortcut - Press # to give Claude an instruction that automatically gets added to your CLAUDE.md file. Great for documenting commands and patterns as you discover them.

Thinking modes - Ask Claude to make a plan for how to approach a specific problem. Use the word "think" to trigger extended thinking mode, which gives Claude additional computation time to evaluate alternatives more thoroughly. These specific phrases map directly to increasing levels of thinking budget: "think" < "think hard" < "think harder" < "ultrathink."

But use ultrathink sparingly. Research shows that excessive thinking time can lead to overengineering and worse output, a phenomenon called "inverse scaling in test-time compute." If the plan seems reasonable, have Claude write it to a document or GitHub issue so you can reset to that point if the implementation doesn't work out.

Custom slash commands - Store prompt templates in .claude/commands/ for repeatable workflows. For example, create a /fix-github-issue command that pulls issue details and implements the fix.

Tool allowlists - Use /permissions to customize which tools Claude can use without asking. Add frequently used commands like Edit or Bash(git commit:*) to streamline your workflow.
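The same allowlist can also be scripted, which helps when you set up many repos the same way. Claude Code reads project settings from `.claude/settings.json`, with allowed tools listed under `permissions.allow`; confirm the exact schema against the settings documentation for your version. A minimal sketch (`allow_tools` is a hypothetical helper) that adds rules without duplicating them or clobbering other settings:

```python
import json
from pathlib import Path


def allow_tools(project_root: str, rules: list[str]) -> list[str]:
    """Add rules to permissions.allow in .claude/settings.json, skipping duplicates."""
    path = Path(project_root) / ".claude" / "settings.json"
    settings = json.loads(path.read_text()) if path.exists() else {}
    allow = settings.setdefault("permissions", {}).setdefault("allow", [])
    for rule in rules:
        if rule not in allow:
            allow.append(rule)
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(settings, indent=2) + "\n")
    return allow
```

For example, `allow_tools(".", ["Edit", "Bash(git commit:*)"])` mirrors the two entries mentioned above.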

Moving Forward

Six months ago, AI coding tools were toys. Today, Claude Code handles production systems. The gap closed fast.

Engineers who learn AI collaboration will outpace those who don't. The advantage comes from human creativity multiplied by AI execution.

Start with /init on your existing project to generate a CLAUDE.md starter, then customize it based on your specific needs. Or write your own CLAUDE.md from scratch - there's no required format, just document what helps Claude understand your codebase. Try the explore-plan-code-commit workflow. See what happens.

July 27th, 2025